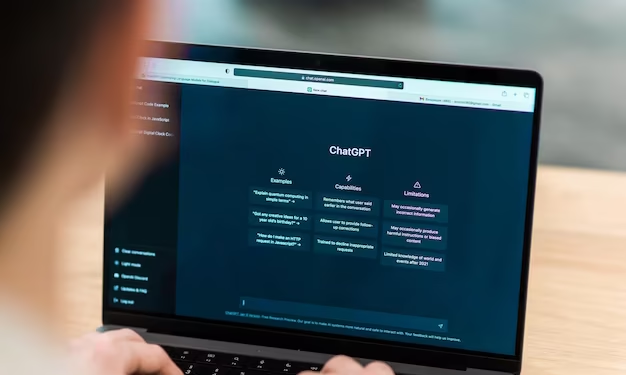

AI therapy in Bali – Picture this: It’s 2 a.m., you’re sweating through another Bali night, scrolling through old photos, and suddenly – boom – an existential crisis hits. Your friends are asleep (or pretending to be), so you do what any modern heartbroken soul would do: you DM ChatGPT.

“Why did she leave me?”

The AI responds instantly – more articulate than your surf instructor explaining tides, more compassionate than your ex ever was. Before you know it, you’re spilling secrets you wouldn’t even tell your warung guy.

Here’s the cold Bintang truth: That “therapy session” isn’t as private as your hidden Instagram story.

Straight from the AI Horse’s Mouth

“People say the most personal sh*t to ChatGPT.”

That’s a direct quote from Sam Altman, CEO of OpenAI, in a recent episode of This Past Weekend with Theo Von.

And he’s not wrong. A huge number of people, especially younger users, are turning to generative AI for advice on relationships, depression, life decisions, and trauma.

We’re talking:

- Midnight breakup confessions

- Quarter-life crisis monologues

- Stuff you wouldn’t even whisper at a sound healing session

But here’s the kicker: ChatGPT has about as much confidentiality as a Canggu beach bartender. No legal protection, no privilege – just your deepest fears floating in the digital void.

No Laws. No Limits. Just Vulnerability.

To be fair, OpenAI isn’t marketing ChatGPT as a therapist. They’re not encouraging users to overshare—but they know it’s happening.

The bigger issue? AI is moving faster than the systems meant to regulate it.

Right now, there’s no clear legal protection for AI conversations, even if they sound like therapy, even if they’re deeply personal, even if you think no one’s watching.

And let’s be honest: AI isn’t emotionally intelligent. It doesn’t know you the way a therapist would, can’t be relied on to track your history long-term, and definitely can’t help in a crisis. It just mimics understanding based on patterns from the internet.

So why are people still pouring their hearts out to it?

Because real therapy is expensive. Because mental health access is broken. Because it’s easier to talk to something that never interrupts.

Why Your ChatGPT Secrets Aren’t Safe

- No doctor-patient privilege: OpenAI CEO Sam Altman admits they “haven’t figured out” legal protections for your chats

- Data storage = possibly forever: Those midnight confessions? Archived on servers like a dodgy Kuta hostel guestbook

- Bali reality check: Local internet cafes and shared villas = extra exposure for your digital privacy

“Saw a German digital nomad sobbing while typing to ChatGPT at Dojo. Later found out he’d confessed to tax fraud. Not smart.”

— Wayan, Canggu Coworking Manager

Why This Hits Different in Bali

This island runs on healing journeys and fresh starts – but replacing human connection with AI therapy is like swapping a Balinese massage for a vending machine rub. Between:

- Digital nomads processing life crises between smoothie bowls

- Local Gen Z seeking mental health support without stigma

- Tourists confessing things they’d never say back home

…we’re creating a perfect storm of unprotected vulnerability under the guise of “self-care”. Your deepest fears don’t belong in training data for future AI models.

Safer Alternatives in Bali:

- Talk to real humans: Try Bali’s expat support groups or KPSI Bali (Indonesian psychologists network)

- Nature therapy: Ocean swims > algorithm chats (and the saltwater’s free)

- Journal on paper: Old school beats cloud storage for privacy

Remember: ChatGPT gives great breakup poetry – but it can’t replace real healing in a place that literally sells soul-searching.

Bottom Line:

Need to vent? ChatGPT’s there. Need actual help? Put down the laptop and talk to a real human. Or at least journal on paper – the original encrypted messaging.

– Giostanovlatto